Segmentation

Image segmentation, also known as classification or labelization and, in some fields, as reconstruction, is the process of partitioning an image into multiple segments, or sets of voxels, that share certain characteristics. The main goal of segmentation is to simplify or change the representation of an image into something that is more meaningful and easier to analyze. Once a segmentation is complete, statistics can be computed easily. Another objective of segmentation is to organize an image into higher-level units, such as meshes, that are more efficient for visualization and further analysis.

You can use the Object Analysis tool to further analyze regions of interest that contain multiple objects (see Quantification).

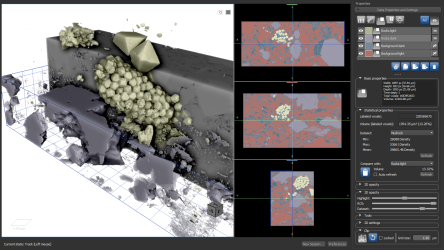

Segmented image (with clipping)

A region of interest (ROI) is simply a set of labeled voxels within a shape that has a specific size and spacing. It does not contain data, only labeled voxels. However, when an ROI is combined with a dataset of the same shape, you can extract parameters such as the minimum, maximum, and mean values of the dataset within the volume of the ROI. With Dragonfly you can create an unlimited number of regions of interest. To refine an ROI, you can also apply it to different datasets of the same object that were acquired with different modalities or at different times.
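The relationship between an ROI and a dataset can be illustrated outside of Dragonfly with a minimal NumPy sketch. It is not Dragonfly's API. It assumes the ROI is represented as a boolean array with the same shape as the dataset, and the function name `roi_statistics` is hypothetical.

```python
import numpy as np

def roi_statistics(dataset, roi_mask):
    """Return min, max, and mean of the dataset values inside a boolean ROI mask.

    Assumption: the ROI is modeled as a boolean array (True = labeled voxel)
    with the same shape as the dataset. This is an illustration only.
    """
    if dataset.shape != roi_mask.shape:
        raise ValueError("dataset and ROI must have the same shape")
    values = dataset[roi_mask]  # intensities of the labeled voxels only
    if values.size == 0:
        raise ValueError("ROI contains no labeled voxels")
    return {
        "min": float(values.min()),
        "max": float(values.max()),
        "mean": float(values.mean()),
    }

# A small 3x3x3 dataset whose voxel values are 0..26.
dataset = np.arange(27, dtype=np.float64).reshape(3, 3, 3)

# The ROI holds no intensities, only labels: here one row of three voxels.
roi_mask = np.zeros((3, 3, 3), dtype=bool)
roi_mask[1, 1, :] = True

stats = roi_statistics(dataset, roi_mask)
print(stats)
```

Because the mask carries only labels, the same `roi_mask` could be reused with any other array of the same shape, which mirrors applying one ROI to several datasets of the same object.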

In 3D views, regions of interest can be extracted from volumes to create views containing only segmented areas, or they can be subtracted to create views of everything except the regions of interest (see 3D Opacity).
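Conceptually, extracting and subtracting an ROI are complementary masking operations. The sketch below shows the idea with NumPy under the assumption that the ROI is a boolean array and that excluded voxels are replaced by a fill value. The helper names `extract_roi` and `subtract_roi` are hypothetical and do not describe how Dragonfly renders 3D views.

```python
import numpy as np

def extract_roi(volume, roi_mask, fill=0):
    """Keep only voxels inside the ROI; everything else becomes `fill`."""
    return np.where(roi_mask, volume, fill)

def subtract_roi(volume, roi_mask, fill=0):
    """Keep everything except the ROI; voxels inside it become `fill`."""
    return np.where(roi_mask, fill, volume)

# A 2x2x2 volume with voxel values 1..8.
volume = np.arange(1, 9).reshape(2, 2, 2)

# Assumed ROI: the two brightest voxels (values 7 and 8).
roi_mask = volume > 6

inside = extract_roi(volume, roi_mask)
outside = subtract_roi(volume, roi_mask)
```

With a fill value of 0, adding `inside` and `outside` voxel by voxel gives back the original volume, which is a quick check that the two views are complementary.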

In simple cases, environments may be controlled well enough that the segmentation process reliably extracts only the parts that need to be analyzed further. In complex cases in which boundary insufficiencies are present, such as missing edges or a lack of contrast between foreground and background regions, segmentation can be more difficult. In either case it is important to understand that:

- There is no universally applicable technique that will work for all types of images.

- No segmentation technique is perfect.

- Pre-processing, by smoothing noisy images or enhancing edges, can accelerate some segmentation tasks (see Image Processing).

- If you are segmenting large volumes, it may be helpful to simplify the volume before segmentation (see Cropping Datasets).

- Advanced segmentation tools, such as histographic segmentation and trainable segmentation, are available as standalone modules (see Histographic Segmentation and Segmentation Trainer). Advanced operations, such as determining porosity and analyzing objects, are available in the context menu for selected ROIs (see ROI Pop-Up Menu).