Model Generator and Deep Learning Architectures

The Model Generator dialog for the Deep Learning Tool and the Segmentation Wizard provides settings for generating new deep models with a number of different architectures, as well as for downloading pre-trained models (see Pre-Trained Models).

Click the New button on the Model Overview panel to open the Model Generator dialog, shown below.

Model Generator dialog

The following table lists the settings that are available for generating semantic segmentation, super-resolution, and denoising deep models.

| Description | |

|---|---|

| Show architectures for |

Lets you filter the available architectures to those recommended for segmentation, super-resolution, and denoising.

Semantic Segmentation… Filters the Architecture list to models best suited for semantic segmentation, which is the process of associating each pixel of an image with a class label, such as a material phase or anatomical feature. Semantic segmentation models are suitable for binary and multi-class semantic segmentation tasks. Super-resolution… Filters the Architecture list to models best suited for super-resolution. Denoising… Filters the Architecture list to models best suited for denoising. |

| Architecture |

Lists the default models supplied with the Deep Learning Tool and the Segmentation Wizard. Architectures can be filtered by type (see Architectures and Editable Parameters for Semantic Segmentation Models and Architectures and Editable Parameters for Regression Models).

Note You can also download a selection of pre-trained models (see Pre-Trained Models). |

| Architecture description | Provides a short description of the selected architecture and a link for further information. |

| Model type | Lets you choose the type of model — Regression or Semantic segmentation — that you need to generate. |

| Class count | Available only for semantic segmentation model types, this parameter lets you enter the number of classes required. The minimum number of classes is '2', which would be for a binary segmentation task, while the maximum is '20' for multi-class segmentations. |

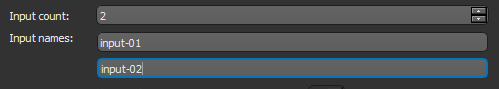

| Input count |

Lets you include multiple inputs for training. For example, when you are working with data from simultaneous image acquisition systems you might want to select each modality as an input.

If additional inputs are added, then you can name them in the 'Input names' edit boxes.

|

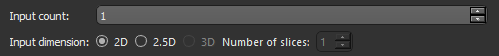

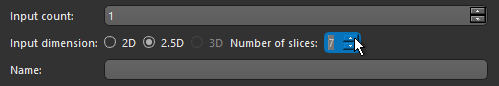

| Input dimension |

Lets you select an input dimension, as follows.

2D… The 2D approach analyzes and infers one slice of the image at a time. All feature maps and parameter tensors in all layers are 2D.

2.5D… The 2.5D approach analyzes a number of consecutive slices of the image as input channels. The remaining parts of the 2.5D model, including the feature maps and parameter tensors, are 2D, and the model output is the inferred 2D middle slice within the selected number of slices. You can choose the number of slices, as shown below.

3D… The 3D approach analyzes and infers the image volume in three-dimensional space. This approach is often more reliable for inference when compared to 2D and 2.5D approaches. but may need more computational memory to train and apply. Note Only U-Net 3D is a true 3D model that uses 3D convolutions. The number of input slices for this model is determined by the input size, which must be cubic. For example, 32x32x32. U-Net uses 2D convolutions, but can take 2.5D input patches for which you can choose the number of slices. You should also note that in some cases, 3D models can be more reliable for segmentation tasks. |

| Name | Lets you enter a name for the generated model. |

| Description | Lets you enter a description of your model. |

| Parameters | Lists the hyperparameters associated with the selected architecture and the default values for each (see Architectures and Editable Parameters for Semantic Segmentation Models and Architectures and Editable Parameters for Regression Models. |
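The 2.5D option and the Class count limits can be illustrated with a short sketch. The Python below is a minimal, hypothetical illustration, not the Deep Learning Tool's own implementation: it stacks a chosen number of consecutive slices around a centre slice as input channels, as described for 2.5D input, and checks limits stated in the table above (a class count between 2 and 20, and a cubic input size for U-Net 3D). The function names, the requirement for an odd slice count, and the clamping of slice indices at the volume edges are assumptions made for this example.

```python
from typing import List, Sequence

# A 2D slice is a list of rows; a volume is a list of slices indexed as [z][y][x].
Slice = List[List[float]]
Volume = List[Slice]

MIN_CLASSES, MAX_CLASSES = 2, 20  # Class count limits stated in the settings table


def make_25d_input(volume: Volume, center: int, num_slices: int) -> List[Slice]:
    """Hypothetical helper: stack consecutive slices around `center` as channels.

    Assumes an odd `num_slices`, so that the model's target is the middle
    slice, and clamps indices that fall outside the volume (an assumption;
    the tool's own edge handling is not described here).
    """
    if num_slices < 1 or num_slices % 2 == 0:
        raise ValueError("num_slices must be a positive odd number")
    half = num_slices // 2
    last = len(volume) - 1
    channels = []
    for offset in range(-half, half + 1):
        z = min(max(center + offset, 0), last)  # clamp to the first/last slice
        channels.append(volume[z])
    return channels


def check_class_count(class_count: int) -> None:
    """Reject class counts outside the documented 2..20 range."""
    if not MIN_CLASSES <= class_count <= MAX_CLASSES:
        raise ValueError(f"class count must be between {MIN_CLASSES} and {MAX_CLASSES}")


def is_cubic(input_size: Sequence[int]) -> bool:
    """U-Net 3D requires a cubic input size such as 32x32x32."""
    return len(input_size) == 3 and input_size[0] == input_size[1] == input_size[2]


if __name__ == "__main__":
    # Toy volume: 5 slices of 2x2 pixels, each filled with its own z index.
    volume = [[[float(z)] * 2 for _ in range(2)] for z in range(5)]
    patch = make_25d_input(volume, center=2, num_slices=3)
    print([channel[0][0] for channel in patch])  # slices 1, 2 and 3
    print([channel[0][0] for channel in make_25d_input(volume, 0, 3)])  # edge slice repeated
    check_class_count(2)  # binary segmentation: accepted
    print(is_cubic((32, 32, 32)), is_cubic((32, 32, 16)))
```

Clamping repeats the edge slice when the window runs past the first or last slice; zero padding or mirroring would be equally plausible choices for a sketch like this.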

Architectures and Editable Parameters for Semantic Segmentation Models

The following table lists the deep learning architectures available for semantic segmentation, together with the hyperparameters that you can edit for each architecture in the Model Generator dialog.

| Architecture | Description |
|---|---|
| Attention U-Net | This attention gate (AG) model, which was originally designed for medical image segmentation, automatically learns to focus on target structures of varying shapes and sizes while suppressing irrelevant regions in input images. Because only salient features are highlighted, the explicit external tissue/organ localization modules used in cascaded convolutional neural networks (CNNs) are no longer needed. Integrated into the standard U-Net architecture, AGs can increase model sensitivity and prediction accuracy.<br>Hyperparameters editable in the Model Generator:<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolution layer.<br>Reference |
| Auto-Encoder | Generic autoencoder.<br>Hyperparameters editable in the Model Generator:<br>Initial filter count: Filter count at the first convolutional layer.<br>Kernel size: Kernel size of the convolutional filters.<br>Pooling size: Pooling window size.<br>Reference |
| BiSeNet | Bilateral segmentation network for real-time semantic segmentation.<br>Hyperparameters editable in the Model Generator:<br>Patch size: Size of the input patches.<br>Reference |
| DeepLabV3+ | Encoder-decoder with atrous separable convolution for semantic image segmentation.<br>Hyperparameters editable in the Model Generator:<br>Backbone: Backbone to use (Xception or MobileNetV2).<br>Patch size: Fixed size of the input patches.<br>Output stride: Ratio of the input image size to the encoder output size.<br>Reference |
| FC-DenseNet | Semantic segmentation model.<br>Hyperparameters editable in the Model Generator:<br>Model type: Model variation to generate (FC-DenseNet56, FC-DenseNet67, or FC-DenseNet103).<br>Reference |
| INet | Convolutional network for biomedical image segmentation.<br>Hyperparameters editable in the Model Generator:<br>Filter count: Filter count at each convolution layer.<br>Reference |
| LinkNet | This architecture focuses on speed and efficiency for semantic segmentation tasks. Compared to other algorithms, LinkNet can learn with fewer parameters and operations and still deliver accurate results.<br>Hyperparameters editable in the Model Generator:<br>Patch size: Fixed size of the input patches.<br>Initial filter count: Filter count at each convolution layer.<br>Reference |
| MA-Net | Multi-scale attention network originally designed for liver and tumor segmentation.<br>Hyperparameters editable in the Model Generator:<br>Patch size: Fixed size of the input patches.<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolution layer.<br>Reference |
| PSPNet | Semantic segmentation model.<br>Hyperparameters editable in the Model Generator:<br>Backbone: Backbone to use (ResNet50 or ResNet101).<br>Patch size: Fixed size of the input patches.<br>Filter count: Filter count at each convolution layer.<br>Reference |
| Sensor3D | Semantic segmentation model using convolutional LSTM.<br>Hyperparameters editable in the Model Generator:<br>Depth level: Depth of the network (as determined by the number of pooling layers) and the patch size.<br>Initial filter count: Filter count at the first convolution layer.<br>Reference |
| Swin UNETR | A U-Net architecture combining a pure Swin transformer encoder and a deconvolutional decoder.<br>Hyperparameters editable in the Model Generator:<br>Patch size: Fixed size of the input patches.<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Use batch normalization: If set to True, batch normalization is applied.<br>Encoder: Encoder to use (Small, Base, or Large).<br>Reference |
| Trans UNet | A U-Net architecture using transformer layers as a bottleneck. The architecture has been optimized with TransBTS hyperparameters and registers.<br>Hyperparameters editable in the Model Generator:<br>Patch size: Fixed size of the input patches.<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolution layer.<br>Use batch normalization: If set to True, batch normalization is applied.<br>References |
| U-Net | All-purpose model designed especially for medical image segmentation.<br>Hyperparameters editable in the Model Generator:<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolution layer.<br>Reference |
| U-Net 3D | 3D implementation of U-Net.<br>Note: Only U-Net 3D is a fully 3D model that uses 3D convolutions. The number of input slices for this model is determined by the input size, which must be cubic (for example, 32x32x32). U-Net uses 2D convolutions, but can take 2.5D input patches for which you can choose the number of slices. In some cases, 3D models can be more reliable for segmentation tasks.<br>Hyperparameters editable in the Model Generator:<br>Topology: Topology of the model.<br>Initial filter count: Filter count at the first convolution layer.<br>Use batch normalization: If set to True, batch normalization is applied.<br>Reference |
| U-Net SR | Single image super-resolution/super segmentation model based on a modified U-Net with mixed gradient loss.<br>Hyperparameters editable in the Model Generator:<br>Scale: Ratio of the input size to the output size.<br>Patch size: Fixed size of the input patches.<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolution layer.<br>Convolution layer count: Number of times to repeat convolution layers in a block.<br>Reference |
| U-Net++ | An architecture for medical image and semantic segmentation. U-Net++ is a deeply supervised encoder-decoder network in which the encoder and decoder sub-networks are connected through a series of nested, dense skip pathways. The skip pathways help reduce the semantic gap between the feature maps of the encoder and decoder sub-networks.<br>Hyperparameters editable in the Model Generator:<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolution layer.<br>Reference |
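Several architectures in the table above share the Depth level, Initial filter count, Patch size, and Output stride hyperparameters. The sketch below shows how these values might interact, assuming the common U-Net convention in which each pooling layer halves the spatial size and the filter count doubles at each level. That doubling rule, the requirement that the patch size divide evenly by 2 to the power of the depth level, and the function names are assumptions for illustration; the Model Generator may build its networks differently. The output stride calculation follows the definition given for DeepLabV3+ (ratio of the input image size to the encoder output size).

```python
from typing import List, Tuple


def encoder_plan(patch_size: int, depth_level: int, initial_filters: int) -> List[Tuple[int, int]]:
    """Return (spatial size, filter count) for each encoder level of a U-Net-style model.

    Assumptions: each of the `depth_level` pooling layers halves the spatial
    size, and the filter count doubles after each pooling step.
    """
    if patch_size % (2 ** depth_level) != 0:
        raise ValueError("patch size must be divisible by 2 ** depth_level")
    plan = []
    size, filters = patch_size, initial_filters
    for _ in range(depth_level + 1):  # full-resolution level plus one level per pooling layer
        plan.append((size, filters))
        size //= 2
        filters *= 2
    return plan


def encoder_output_size(patch_size: int, output_stride: int) -> int:
    """Output stride = image size / encoder output size, so invert that ratio."""
    if patch_size % output_stride != 0:
        raise ValueError("patch size must be divisible by the output stride")
    return patch_size // output_stride


if __name__ == "__main__":
    for level, (size, filters) in enumerate(encoder_plan(256, 4, 32)):
        print(f"level {level}: {size}x{size} pixels, {filters} filters")
    print(encoder_output_size(512, 16))
```

Under these assumptions, a patch size of 256 with a depth level of 4 and 32 initial filters ends at 16x16 feature maps with 512 filters, which is one way to see why deeper networks call for larger patches.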

Architectures and Editable Parameters for Regression Models

The following table lists the deep learning architectures available for super-resolution and denoising, together with the hyperparameters that you can edit for each architecture in the Model Generator dialog.

| Architecture | Description |
|---|---|
| Auto-Encoder | Generic autoencoder for semantic segmentation and denoising.<br>Hyperparameters editable in the Model Generator:<br>Initial filter count: Filter count at the first convolutional layer.<br>Kernel size: Kernel size of the convolutional filters.<br>Pooling size: Pooling window size.<br>Reference |
| EDSR | Super-resolution model.<br>Hyperparameters editable in the Model Generator:<br>Scale: Ratio of the input size to the output size.<br>Patch size: Fixed size of the input patches.<br>Filter count: Filter count at each convolution layer.<br>ResNet block count: Number of times to repeat ResNet blocks.<br>Use Tanh activation: Determines whether Tanh activation is applied (True or False).<br>Reference |
| FreqNet | A frequency-domain image super-resolution model that can explicitly learn to reconstruct high-frequency details from low-resolution images.<br>Hyperparameters editable in the Model Generator:<br>ResNet block count: Number of times to repeat ResNet blocks.<br>Size of kernels in filter bank: Size of the kernels in the filter bank.<br>Stride of filter bank kernels: Stride of the kernels in the filter bank.<br>Reference |
| Multi Stage Wavelet Denoise | A multi-stage image denoising model based on a dynamic convolutional block, two cascaded wavelet transform and enhancement blocks, and a residual block.<br>Hyperparameters editable in the Model Generator:<br>Initial filter count: Filter count at the first convolutional layer.<br>Kernel size: Kernel size of the convolutional filters.<br>Convolutional layer count: Number of times to repeat convolution layers in a block.<br>Reference |
| Multi-level Wavelet U-Net | A U-Net style encoder/decoder network with discrete wavelet transform (DWT) encoding and inverse wavelet transform (IWT) decoding for image denoising and single image super-resolution. Haar wavelets are used to perform the transformations.<br>Hyperparameters editable in the Model Generator:<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolutional layer.<br>Kernel size: Kernel size of the convolutional filters.<br>Convolutional layer count: Number of times to repeat convolution layers in a block.<br>Reference |
| Noise2Noise | Denoising model.<br>Hyperparameters editable in the Model Generator:<br>Initial filter count: Filter count at the first convolutional layer.<br>Reference |
| Noise2Noise_SRResNet | Denoising model.<br>Hyperparameters editable in the Model Generator:<br>Initial filter count: Filter count at the first convolutional layer.<br>ResNet block count: Number of times to repeat ResNet blocks.<br>Reference |
| Non-local Fourier U-Net | A U-Net style encoder/decoder network using non-local fast Fourier convolution (NL-FFC) layers, which have shown promising results in image super-resolution. The NL-FFC layer combines local and global features in the spectral and spatial domains.<br>Hyperparameters editable in the Model Generator:<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolutional layer.<br>Kernel size: Kernel size of the convolutional filters.<br>Reference |
| U-Net | All-purpose model designed especially for medical image segmentation.<br>Hyperparameters editable in the Model Generator:<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolution layer.<br>Reference |
| U-Net 3D | 3D implementation of U-Net.<br>Note: Only U-Net 3D is a fully 3D model that uses 3D convolutions. The number of input slices for this model is determined by the input size, which must be cubic (for example, 32x32x32). U-Net uses 2D convolutions, but can take 2.5D input patches for which you can choose the number of slices. In some cases, 3D models can be more reliable for segmentation tasks.<br>Hyperparameters editable in the Model Generator:<br>Topology: Topology of the model.<br>Initial filter count: Filter count at the first convolution layer.<br>Use batch normalization: If set to True, batch normalization is applied.<br>Reference |
| U-Net SR | Single image super-resolution/super segmentation model based on a modified U-Net with mixed gradient loss.<br>Hyperparameters editable in the Model Generator:<br>Scale: Ratio of the input size to the output size.<br>Patch size: Fixed size of the input patches.<br>Depth level: Depth of the network, as determined by the number of pooling layers.<br>Initial filter count: Filter count at the first convolution layer.<br>Convolution layer count: Number of times to repeat convolution layers in a block.<br>Reference |
| WDSR | Super-resolution model.<br>Hyperparameters editable in the Model Generator:<br>Model type: Model variation to generate (WDSR-A or WDSR-B).<br>Scale: Ratio of the input size to the output size.<br>Patch size: Fixed size of the input patches.<br>Filter count: Filter count at each convolution layer.<br>ResNet block count: Number of times to repeat ResNet blocks.<br>ResNet block expansion: Ratio by which to multiply the number of filters in the ResNet block expansion layer.<br>Reference |
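The Multi-level Wavelet U-Net description above notes that Haar wavelets perform the DWT encoding and IWT decoding. As a rough illustration of why such a transform can replace pooling without discarding information, the sketch below applies a single-level 2D Haar transform to an image with even dimensions, producing four half-size subbands, and then inverts it exactly. It is a plain-Python sketch, not the network's actual layer; the subband names (LL, LH, HL, HH) and the sign conventions are one common choice among several.

```python
from typing import Dict, List

Image = List[List[float]]


def haar_dwt2(image: Image) -> Dict[str, Image]:
    """Single-level 2D Haar DWT: split each 2x2 block into four subbands."""
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image dimensions must be even")
    bands: Dict[str, Image] = {name: [] for name in ("LL", "LH", "HL", "HH")}
    for i in range(0, rows, 2):
        ll, lh, hl, hh = [], [], [], []
        for j in range(0, cols, 2):
            a, b = image[i][j], image[i][j + 1]
            c, d = image[i + 1][j], image[i + 1][j + 1]
            ll.append((a + b + c + d) / 2)  # low-pass: scaled local average
            lh.append((a - b + c - d) / 2)  # left column minus right column
            hl.append((a + b - c - d) / 2)  # top row minus bottom row
            hh.append((a - b - c + d) / 2)  # diagonal differences
        for name, row in zip(("LL", "LH", "HL", "HH"), (ll, lh, hl, hh)):
            bands[name].append(row)
    return bands


def haar_idwt2(bands: Dict[str, Image]) -> Image:
    """Inverse of haar_dwt2: rebuild each 2x2 block from the four subbands."""
    ll, lh, hl, hh = bands["LL"], bands["LH"], bands["HL"], bands["HH"]
    image: Image = []
    for i in range(len(ll)):
        top, bottom = [], []
        for j in range(len(ll[0])):
            s, h, v, dg = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            top += [(s + h + v + dg) / 2, (s - h + v - dg) / 2]
            bottom += [(s + h - v - dg) / 2, (s - h - v + dg) / 2]
        image += [top, bottom]
    return image


if __name__ == "__main__":
    img = [[1.0, 2.0, 3.0, 4.0],
           [5.0, 6.0, 7.0, 8.0],
           [9.0, 10.0, 11.0, 12.0],
           [13.0, 14.0, 15.0, 16.0]]
    bands = haar_dwt2(img)
    print(bands["LL"])  # each subband is half the size of the input in each dimension
    print(haar_idwt2(bands) == img)  # the transform is invertible
```

Because the four subbands together hold exactly as many values as the input, the transform halves the spatial size while keeping all of the information, unlike max pooling.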

Refer to the following topics for information about generating models for specific tasks: